Any Deep Learning classifier is a form of Logistic Regression


Claim: Any deep learning classifier is a form of logistic regression.

Really? Yes 😀

To explain why, we first briefly review logistic regression and then connect it to neural network classifiers.

The logistic regression model arises from the desire to model the posterior probabilities of the classes $p(y\vert\mathbf{x})$ via linear functions in $\mathbf{x}$, while ensuring that the class probabilities sum to one and remain in the range $[0,1]$ (Ref).

The logistic model parameterizes the class-posterior probability $p(Y=y\vert\mathbf{X} = \mathbf{x})$ in a log-linear form as

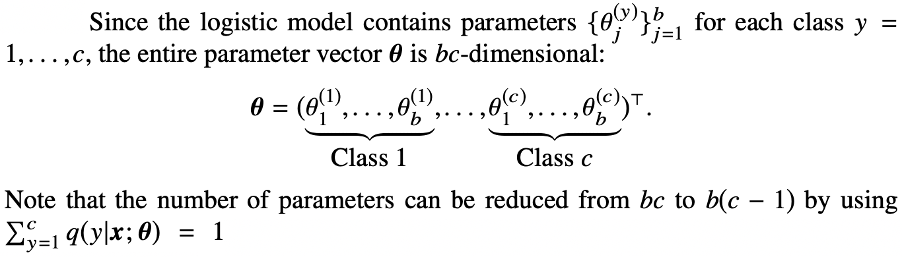

\[q(y|\mathbf{x}; \boldsymbol{\theta}) = \frac{e^{\sum_{j=1}^b \theta_j^{(y)} \phi_j (\mathbf{x})}}{\sum_{y'=1}^c e^{\sum_{j=1}^b \theta_j^{(y')} \phi_j (\mathbf{x})}} = \frac{e^{\boldsymbol{\theta}^{(y)\top} \boldsymbol{\phi} (\mathbf{x})}}{\sum_{y'=1}^c e^{\boldsymbol{\theta}^{(y')\top} \boldsymbol{\phi} (\mathbf{x})}}\]

where $c$ is the number of classes, $\boldsymbol{\phi}(\mathbf{x})$ is the $b$-dimensional vector obtained by transforming the data point $\mathbf{x}$, and $\boldsymbol{\theta}^{(y)} = (\theta_1^{(y)}, \dots, \theta_b^{(y)})^\top$ is the parameter vector for class $y$; $\boldsymbol{\theta}$ collects the parameter vectors of all $c$ classes. Note that the exponent is still linear in the parameters, even though $\boldsymbol{\phi}$ may be a non-linear transformation of the data. Note also that a separate posterior is formed for each class $y$, which is why the parameters carry the superscript $(y)$, i.e. $\boldsymbol{\theta}^{(y)}$. (Ref)

This models the probability that, for a given sample $\mathbf{x}$, the label is class $y$. We compute this probability for every class and predict the class with the highest probability (Ref).
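As a minimal sketch of the model above, the pure-Python functions below compute $q(y|\mathbf{x}; \boldsymbol{\theta})$ for every class from a precomputed feature vector $\boldsymbol{\phi}(\mathbf{x})$ and then pick the most probable class. The function names `class_posteriors` and `predict` and the toy parameters are hypothetical, classes are indexed from 0 rather than 1, and $\boldsymbol{\phi}$ is assumed to have been applied already.

```python
import math

def class_posteriors(theta, phi_x):
    """Softmax posterior q(y | x; theta) for every class y.

    theta: list of c parameter vectors theta^(y), each of length b.
    phi_x: the (already transformed) feature vector phi(x), length b.
    """
    # Linear score theta^(y)^T phi(x) for each class.
    scores = [sum(t_j * f_j for t_j, f_j in zip(theta_y, phi_x)) for theta_y in theta]
    # Subtract the largest score before exponentiating to avoid overflow;
    # it cancels in the ratio, so the probabilities are unchanged.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict(theta, phi_x):
    """Return the index of the class with the highest posterior probability."""
    probs = class_posteriors(theta, phi_x)
    return max(range(len(probs)), key=probs.__getitem__)

# Hypothetical example: c = 3 classes, b = 2 features.
theta = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
phi_x = [2.0, 0.5]
print(class_posteriors(theta, phi_x))
print(predict(theta, phi_x))  # → 0
```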

The parameter $\boldsymbol{\theta}$ is learned by maximum likelihood estimation (MLE). More specifically, $\boldsymbol{\theta}$ is chosen to maximize the log-likelihood, i.e. the log-probability that the training samples $(\mathbf{x}_i, y_i)_{i=1}^N$ were generated by the model, where $N$ is the number of samples (Ref):

\[\boldsymbol{\theta}^{\star} = \mbox{argmax}_{\boldsymbol{\theta}} \sum_{i=1}^N \log q(y_i|\mathbf{x}_i; \boldsymbol{\theta})\]

Now that we have described logistic regression, let's look at a neural network used for binary classification. The multi-class case is analogous: the single logistic output node is replaced by $c$ output nodes with a softmax activation, which has exactly the form of $q(y|\mathbf{x}; \boldsymbol{\theta})$ above.
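For the binary case ($c = 2$), the softmax posterior above reduces to the logistic function, which is why a single output node with a logistic activation is enough. Dividing the numerator and denominator by $e^{\boldsymbol{\theta}^{(1)\top}\boldsymbol{\phi}(\mathbf{x})}$ and defining $\boldsymbol{\theta} = \boldsymbol{\theta}^{(1)} - \boldsymbol{\theta}^{(2)}$ (a reparameterization introduced here for illustration) gives

\[q(y=1|\mathbf{x}; \boldsymbol{\theta}) = \frac{e^{\boldsymbol{\theta}^{(1)\top}\boldsymbol{\phi}(\mathbf{x})}}{e^{\boldsymbol{\theta}^{(1)\top}\boldsymbol{\phi}(\mathbf{x})} + e^{\boldsymbol{\theta}^{(2)\top}\boldsymbol{\phi}(\mathbf{x})}} = \frac{1}{1 + e^{-(\boldsymbol{\theta}^{(1)} - \boldsymbol{\theta}^{(2)})^\top\boldsymbol{\phi}(\mathbf{x})}} = \sigma\left(\boldsymbol{\theta}^\top\boldsymbol{\phi}(\mathbf{x})\right)\]

and $q(y=2|\mathbf{x}; \boldsymbol{\theta}) = 1 - \sigma(\boldsymbol{\theta}^\top\boldsymbol{\phi}(\mathbf{x}))$, where $\sigma(z) = 1/(1+e^{-z})$ is the logistic function.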

The last layer of the network is a single node with the logistic (sigmoid) function $\sigma(z) = 1/(1+e^{-z})$ as its activation. Let $\boldsymbol{\theta}$ denote the parameters of this last layer, and let $\mathbf{h}(\mathbf{x}, \boldsymbol{\gamma})$ denote its input, i.e. the output of the previous layers, where $\boldsymbol{\gamma}$ collects the weights of all previous layers. The network's output is then $q(y=1|\mathbf{x}) = \sigma(\boldsymbol{\theta}^\top \mathbf{h}(\mathbf{x}, \boldsymbol{\gamma}))$. If we replace $\boldsymbol{\phi}(\mathbf{x})$ in the logistic regression above with $\mathbf{h}(\mathbf{x}, \boldsymbol{\gamma})$, we see that the network acts as a logistic regression on top of a complicated transformation of the data, namely everything from the first layer up to the second-to-last layer. The one difference from classical logistic regression is that this transformation is not fixed in advance: $\boldsymbol{\gamma}$ is learned together with $\boldsymbol{\theta}$.
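To make this concrete, here is a minimal pure-Python sketch under strong simplifying assumptions: the "previous layers" are a single tanh layer whose weights $\boldsymbol{\gamma}$ are frozen at hand-picked values, and only the last-layer parameters $\boldsymbol{\theta}$ are trained by gradient ascent on the log-likelihood from the MLE objective above. With $\boldsymbol{\gamma}$ frozen, the training loop is ordinary logistic regression on the features $\mathbf{h}(\mathbf{x}, \boldsymbol{\gamma})$. All names (`hidden_features`, `prob_positive`, `fit_last_layer`, `lr`, `epochs`), the toy data, and the $\{0, 1\}$ label encoding are hypothetical. A real network would also update $\boldsymbol{\gamma}$ via backpropagation; this sketch deliberately does not.

```python
import math

def sigmoid(z):
    """Logistic function used as the activation of the single output node."""
    return 1.0 / (1.0 + math.exp(-z))

def hidden_features(x, gamma):
    """Hypothetical h(x, gamma): one tanh hidden layer plus a constant 1.

    gamma is a list of (weights, bias) pairs, one per hidden unit; the
    constant feature lets theta learn an intercept.
    """
    h = [math.tanh(sum(w * xi for w, xi in zip(weights, x)) + b) for weights, b in gamma]
    return h + [1.0]

def prob_positive(theta, x, gamma):
    """q(y=1 | x; theta) = sigmoid(theta^T h(x, gamma))."""
    h = hidden_features(x, gamma)
    return sigmoid(sum(t * hj for t, hj in zip(theta, h)))

def log_likelihood(theta, data, gamma):
    """Sum over training samples of log q(y_i | x_i; theta), labels in {0, 1}."""
    total = 0.0
    for x, y in data:
        p = prob_positive(theta, x, gamma)
        total += math.log(p if y == 1 else 1.0 - p)
    return total

def fit_last_layer(data, gamma, lr=0.1, epochs=200):
    """Gradient ascent on the log-likelihood over theta only (gamma frozen)."""
    theta = [0.0] * (len(gamma) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(theta)
        for x, y in data:
            h = hidden_features(x, gamma)
            p = sigmoid(sum(t * hj for t, hj in zip(theta, h)))
            # Per-sample gradient of log q(y | x; theta) w.r.t. theta is (y - p) * h.
            for j, hj in enumerate(h):
                grad[j] += (y - p) * hj
        theta = [t + lr * g for t, g in zip(theta, grad)]
    return theta

# Hypothetical toy data: label 1 when x0 + x1 > 0.
data = [([-1.0, -1.0], 0), ([-1.0, -0.5], 0), ([-0.5, -1.0], 0),
        ([1.0, 1.0], 1), ([1.0, 0.5], 1), ([0.5, 1.0], 1)]
# Hypothetical frozen hidden-layer weights gamma (two tanh units).
gamma = [([1.0, 1.0], 0.0), ([1.0, -1.0], 0.0)]

theta = fit_last_layer(data, gamma)
print(log_likelihood([0.0, 0.0, 0.0], data, gamma), log_likelihood(theta, data, gamma))
```

Freezing $\boldsymbol{\gamma}$ isolates the claim being illustrated: the output layer, taken on its own, is fitted exactly like a logistic regression on transformed inputs.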