Why Deep Learning


Deep learning has dominated the field of machine learning and artificial intelligence. But why? The reason is that it is simple enough for many non-professionals to learn to work with it in a short amount of time, and yet it is very effective, capable of solving problems that were simply not solvable with classic methods.

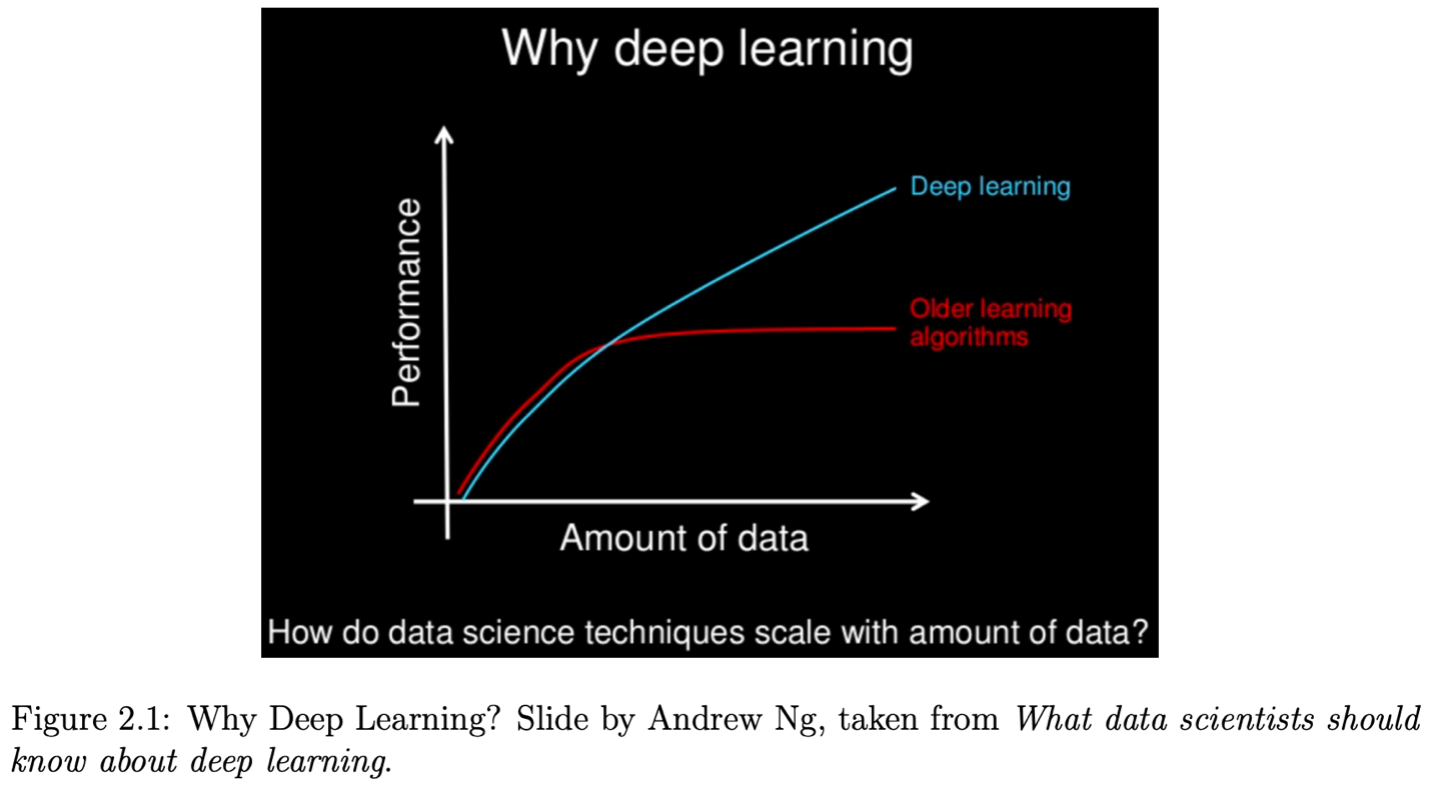

For most flavors of the older generations of learning algorithms, performance plateaus after a certain point as the amount of data increases. Deep learning, however, is the first class of algorithms that is scalable: its performance keeps improving as you feed it more data. (ref) The following figure is from the same reference (ref).

Deep learning excels at unstructured data such as images, sounds, and text. (Ref)

Almost all the value of deep learning today comes through supervised learning, that is, learning from labeled data (comment by Andrew Ng) (ref).

One reason deep learning has taken off like crazy is that it is fantastic at supervised learning (comment by Andrew Ng) (Ref).

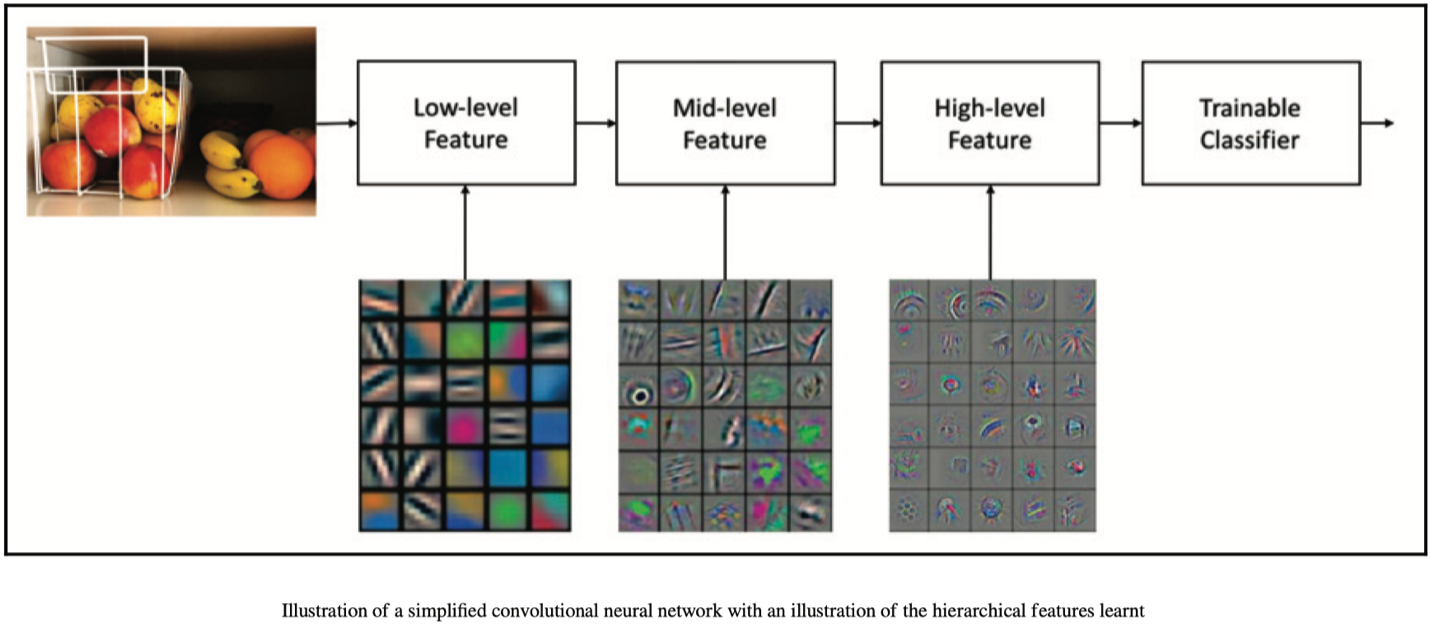

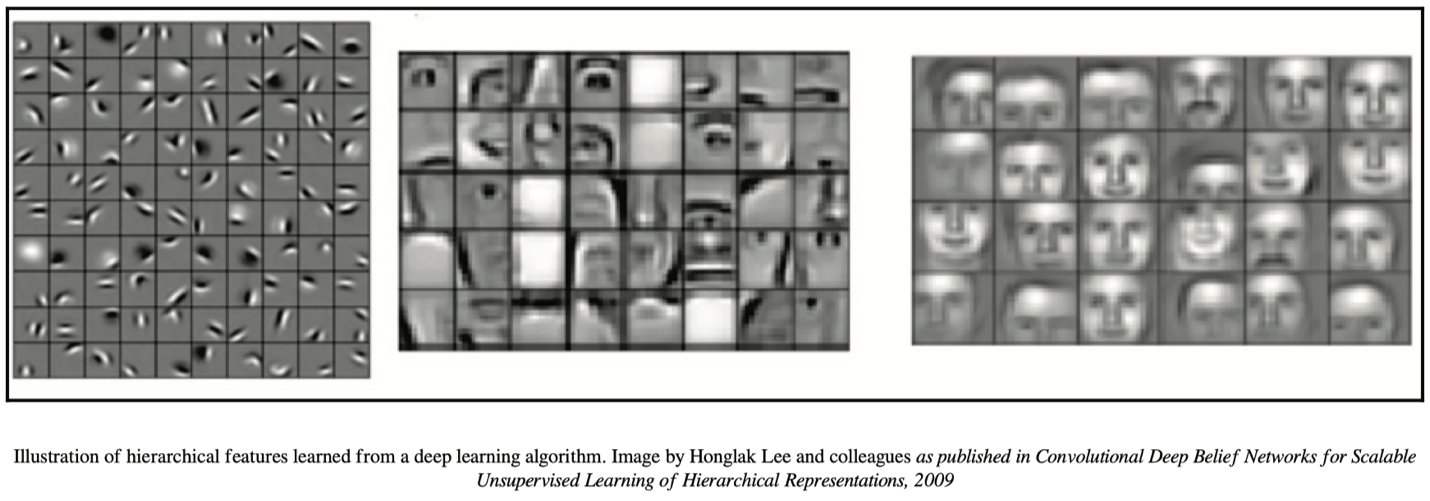

In addition to scalability, another often-cited benefit of deep learning models is their ability to perform automatic feature extraction from raw data, also called feature learning.

Deep learning methods aim at learning feature hierarchies, with features from higher levels of the hierarchy formed by the composition of lower-level features. Automatically learning features at multiple levels of abstraction allows a system to learn complex functions mapping the input to the output directly from data, without depending completely on human-crafted features. (Ref)

Another interpretation is that deep learning is a kind of learning in which the representations you form have several levels of abstraction, rather than a direct mapping from input to output. (Ref)

Deep-learning methods are representation-learning methods with multiple levels of representation, obtained by composing simple but non-linear modules that each transform the representation at one level (starting with the raw input) into a representation at a higher, slightly more abstract level. The key aspect of deep learning is that these layers of features are not designed by human engineers: they are learned from data using a general-purpose learning procedure. (Ref)

In deep learning, the learning rate is often presented as the most important hyperparameter to tune. Although 0.01 is a commonly recommended starting value, it has to be tuned for the specific dataset and model. (ref)
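To see why the learning rate matters so much, here is a minimal sketch on a toy one-parameter loss, $f(w) = w^2$, rather than on a real neural network; the helper name `gradient_descent`, the starting point, and the step count are illustrative assumptions. Each update multiplies $w$ by $(1 - 2\eta)$, where $\eta$ is the learning rate, so a rate that is too small barely moves, a moderate one converges quickly, and one above 1 makes $|1 - 2\eta| > 1$ and diverges.

```python
def gradient_descent(lr: float, w0: float = 5.0, steps: int = 100) -> float:
    """Minimize the toy loss f(w) = w**2 (gradient 2*w) with plain gradient descent."""
    w = w0
    for _ in range(steps):
        w -= lr * 2.0 * w  # each step scales w by (1 - 2*lr)
    return w


for lr in (0.001, 0.01, 0.1, 1.1):
    w = gradient_descent(lr)
    print(f"lr={lr:<6} final loss={w ** 2:.3e}")
```

With a real model the same idea is commonly applied by trying a few values on a log scale around the suggested starting point (for example 0.1, 0.01, 0.001) and keeping the one with the best validation loss.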

In theory, a neural network with a single hidden layer (sometimes counted as a two-layer network) of sufficient capacity can be shown to approximate any continuous function on a bounded domain to arbitrary accuracy. (ref)
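This result is an existence statement, so an experiment can only illustrate it, not prove it. The sketch below fits one hidden layer of ReLU units to $\sin(x)$ on $[-\pi, \pi]$; the target function, the interval, and the helper name `max_fit_error` are arbitrary choices for illustration. To keep it short, it does not train the hidden layer by backpropagation: the hidden weights are random and fixed, and only the output weights are fitted by least squares, a shortcut sometimes called random features.

```python
import numpy as np


def max_fit_error(n_hidden: int, seed: int = 0) -> float:
    """Fit sin(x) on [-pi, pi] with one hidden ReLU layer; return the max absolute error.

    Simplification: hidden weights and biases are random and fixed ("random features");
    only the output layer is fitted, by linear least squares.
    """
    rng = np.random.default_rng(seed)
    x = np.linspace(-np.pi, np.pi, 400)
    y = np.sin(x)
    w = rng.normal(size=n_hidden)                    # hidden-layer weights
    b = rng.uniform(-np.pi, np.pi, size=n_hidden)    # hidden-layer biases
    H = np.maximum(0.0, np.outer(x, w) + b)          # ReLU activations, shape (400, n_hidden)
    H = np.column_stack([H, np.ones_like(x)])        # extra column for the output bias
    v, *_ = np.linalg.lstsq(H, y, rcond=None)        # output-layer weights
    return float(np.max(np.abs(H @ v - y)))


for n in (5, 50, 200):
    print(f"{n:>4} hidden units: max |error| = {max_fit_error(n):.2e}")
```

The error typically shrinks as hidden units are added, which is the behavior the theorem describes; the theorem itself says nothing about how many units are needed or whether gradient-based training will find such weights.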

In deep learning, different neurons look for different features in the input.

Each neural network layer is a "feature transformation". (Ref)

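A deep neural network with no activation function in any neuron is just a linear (strictly, affine) function of its input. For two layers, $W_2(W_1 x + b_1) + b_2 = (W_2 W_1)x + (W_2 b_1 + b_2)$, which is a single layer with weights $W_2 W_1$ and bias $W_2 b_1 + b_2$. The figures below explain this for the case of a two-layer network; for more layers the reasoning is similar. (Ref)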
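The collapse of stacked linear layers is easy to check numerically. This sketch uses arbitrary random weights (the sizes of 3 inputs, 4 hidden units, and 2 outputs are assumptions for illustration) and compares a two-layer network without activations against a single layer with weights `W2 @ W1` and bias `W2 @ b1 + b2`.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two layers with no activation function: 3 inputs -> 4 hidden units -> 2 outputs.
W1, b1 = rng.normal(size=(4, 3)), rng.normal(size=4)
W2, b2 = rng.normal(size=(2, 4)), rng.normal(size=2)


def two_linear_layers(x: np.ndarray) -> np.ndarray:
    h = W1 @ x + b1       # first layer, identity activation
    return W2 @ h + b2    # second layer, identity activation


# The equivalent single layer.
W = W2 @ W1
b = W2 @ b1 + b2

x = rng.normal(size=3)
print(np.allclose(two_linear_layers(x), W @ x + b))  # True
```

Inserting a nonlinearity between the layers, for example a ReLU `np.maximum(0.0, W1 @ x + b1)`, breaks this identity in general, which is why activation functions are what let extra depth add expressive power.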

Hierarchical Feature Learning

When stacking layers, researchers noticed that neural networks are able to learn hierarchies of features: each layer seemed to be learning something more complex than the last. This was the motivation for making neural networks deep, and it is why the field is called deep learning. (Ref)

Deep learning learns hierarchical structures and levels of representation and abstraction to understand the patterns of data that come from various source types, such as images, videos, sound, and text.

This concept is shown in the following figures for image data (Ref):

Automatic Feature Engineering

One of the biggest advantages of deep learning is its ability to automatically learn feature representations at multiple levels of abstraction. This allows a system to learn complex functions that map the input space to the output space without depending heavily on human-crafted features. It also makes pre-training possible: learning representations on available datasets and then applying the learned representations to other domains.

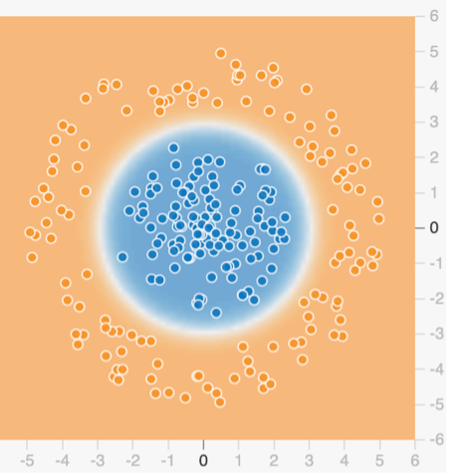

Now, why is automatic feature engineering important? Imagine that you want to learn a nonlinear function, such as a circular decision boundary. You have two options. The first is to use a simple learning model (e.g., a linear classification model) but, through feature engineering, feed it more complicated features as inputs. For example, we can add the quadratic terms of the original features as new features. This is called feature engineering. The model then becomes, for example:

\(\hat{y}=w_1 x_1 + w_2 x_2 + w_3 x_1^2 + w_4 x_2^2 + w_5 x_1 x_2\)

Note that the original features are $x_1$ and $x_2$, but we added the quadratic terms $x_1^2$, $x_2^2$, and $x_1 x_2$, so we can still use a model that is linear in its weights while capturing quadratic terms! The result would be a circular decision boundary like the one in the following figure.
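A minimal sketch of this first option, under illustrative assumptions: toy points drawn uniformly from $[-2, 2]^2$, labeled +1 inside the circle $x_1^2 + x_2^2 < 2$ and -1 outside, and a linear model fitted by ordinary least squares on the ±1 labels as a simple stand-in for a linear classifier such as logistic regression. It compares the raw features $x_1, x_2$ with the engineered quadratic features from the equation above (plus a bias term); the helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: +1 inside the circle x1^2 + x2^2 < 2, -1 outside.
X = rng.uniform(-2, 2, size=(1000, 2))
y = np.where((X ** 2).sum(axis=1) < 2.0, 1.0, -1.0)


def raw_features(X):
    x1, x2 = X[:, 0], X[:, 1]
    return np.column_stack([np.ones(len(X)), x1, x2])


def quadratic_features(X):
    # Engineered features: x1, x2, x1^2, x2^2, x1*x2, plus a bias column.
    x1, x2 = X[:, 0], X[:, 1]
    return np.column_stack([np.ones(len(X)), x1, x2, x1 ** 2, x2 ** 2, x1 * x2])


def linear_model_accuracy(F, y):
    # Fit weights by ordinary least squares on +/-1 labels, then classify by sign.
    w, *_ = np.linalg.lstsq(F, y, rcond=None)
    return float(np.mean(np.sign(F @ w) == y))


print("raw features:      ", linear_model_accuracy(raw_features(X), y))
print("quadratic features:", linear_model_accuracy(quadratic_features(X), y))
```

With only the raw features, no straight line separates the inside of the circle from the outside, so the model does little better than always predicting the majority class; with the quadratic terms, the same linear fitting procedure can represent a circular boundary.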

Note, however, that this is very troublesome and requires strong domain knowledge, which is not always available. There are also too many possibilities for the features. For example, a key feature might take the form $\sin(x_1 + x_2^4)$. To capture such a feature with this approach (a simple learning model fed with engineered features), we would have to consider a long list of candidate features, which is not feasible in practice.
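The length of that list is easy to quantify for polynomial features alone. The number of monomials of total degree at most $d$ in $n$ input variables, bias included, is $\binom{n+d}{d}$; the small helper below (a hypothetical name, `n_polynomial_features`) evaluates it with the standard-library `math.comb`. The case $n = 784$ corresponds to a 28x28 grayscale image and is chosen only for illustration.

```python
from math import comb


def n_polynomial_features(n_inputs: int, degree: int) -> int:
    """Count monomials of total degree <= `degree` in `n_inputs` variables, bias included."""
    return comb(n_inputs + degree, degree)


print(n_polynomial_features(2, 2))    # 6: 1, x1, x2, x1^2, x2^2, x1*x2
print(n_polynomial_features(100, 3))  # 176851
print(n_polynomial_features(784, 3))  # 80931145
```

A feature such as $\sin(x_1 + x_2^4)$ is not a polynomial at all, so even this rapidly growing list would not contain it exactly.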

The second approach is to use a more complex model that learns the features automatically, i.e., a deep neural network.
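As a minimal sketch of this second approach, the code below trains a small neural network by backpropagation on toy data for the circle example above (points drawn uniformly from $[-2, 2]^2$, label 1 inside $x_1^2 + x_2^2 < 2$, label 0 outside), giving it only the raw inputs $x_1$ and $x_2$ with no engineered features. The architecture (a single hidden layer of 16 tanh units with a sigmoid output; a deep network stacks more such layers), the learning rate, and the number of steps are illustrative assumptions, not tuned values.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: label 1 inside the circle x1^2 + x2^2 < 2, label 0 outside.
X = rng.uniform(-2, 2, size=(500, 2))
y = ((X ** 2).sum(axis=1) < 2.0).astype(float).reshape(-1, 1)

# One hidden layer of tanh units and a sigmoid output; the inputs are only x1 and x2.
n_hidden, lr, n_steps = 16, 0.3, 10_000
W1 = rng.normal(size=(2, n_hidden))
b1 = rng.normal(size=n_hidden)
W2 = rng.normal(scale=1.0 / np.sqrt(n_hidden), size=(n_hidden, 1))
b2 = np.zeros(1)


def forward(X):
    h = np.tanh(X @ W1 + b1)                    # learned hidden features
    p = 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))    # probability of "inside"
    return h, p


for _ in range(n_steps):
    h, p = forward(X)
    # Full-batch gradient descent on mean binary cross-entropy, backpropagation by hand.
    d_logit = (p - y) / len(X)                  # dLoss/dlogit for sigmoid + cross-entropy
    d_h = (d_logit @ W2.T) * (1.0 - h ** 2)     # back through tanh (uses W2 before update)
    W2 -= lr * (h.T @ d_logit)
    b2 -= lr * d_logit.sum(axis=0)
    W1 -= lr * (X.T @ d_h)
    b1 -= lr * d_h.sum(axis=0)

_, p = forward(X)
accuracy = float(np.mean((p > 0.5) == (y == 1.0)))
print(f"training accuracy: {accuracy:.3f}")
```

The hidden units play the role of the hand-made quadratic terms: the network combines them into a closed, roughly circular boundary without being told which features to use.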

Note that before deep learning, some applications, such as building image classifiers for lung cancer detection, were developed only by experts in that field, because only they could perform the feature engineering (i.e., only they knew what was important to pass in as input features). But with the development of deep learning and its automatic feature engineering, many new developments in that area are made by researchers from other fields who lack expert domain knowledge. Deep learning allows people who are not domain experts to build models: nowadays, non-radiologists can build state-of-the-art image classifiers for medical diagnosis. (Ref)

Note that feature engineering can still help deep learning boost its performance: well-crafted nonlinear features can take some of the burden off nonlinear models such as deep neural networks. However, feature engineering matters less for deep learning than for other nonlinear models.