Bayesian Bandit Recommender System


We have several bandits, and we do not know the success rate of any of them. We want an algorithm that automatically balances exploration and exploitation and finds the optimal bandit (the one with the highest success rate) for us.
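To make the setup concrete, here is a minimal sketch of such bandits. It assumes each bandit pays a reward of 1 with a fixed but hidden probability and 0 otherwise (a Bernoulli arm). The class name `BernoulliBandits` and the example rates are hypothetical and chosen only for illustration.

```python
import numpy as np


class BernoulliBandits:
    """Hypothetical environment: arm k pays 1 with hidden probability p[k], else 0."""

    def __init__(self, success_rates, seed=None):
        # The true success rates are hidden from the agent; it only sees rewards.
        self._p = np.asarray(success_rates, dtype=float)
        self._rng = np.random.default_rng(seed)

    @property
    def n_arms(self):
        return len(self._p)

    def pull(self, arm):
        # One Bernoulli trial: returns 1 (success) or 0 (failure).
        return int(self._rng.random() < self._p[arm])


bandits = BernoulliBandits([0.2, 0.5, 0.75], seed=0)
rewards = [bandits.pull(2) for _ in range(1000)]
print(sum(rewards) / len(rewards))  # empirical success rate; should be near 0.75
```

The agent only ever observes the 0/1 rewards, so it has to estimate each rate from the pulls it chooses to make.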

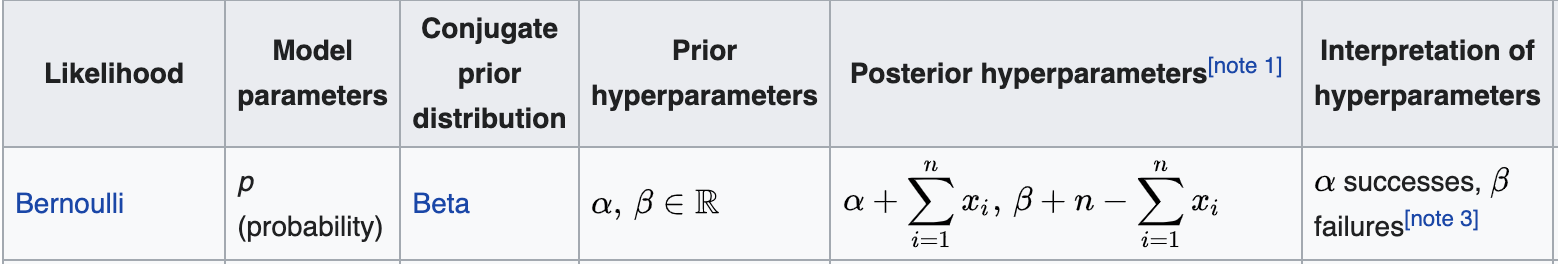

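Here we assume that the prior is a Beta distribution and the likelihood is Bernoulli. Since the Beta distribution is the conjugate prior of the Bernoulli likelihood, the posterior has a closed form (Ref). Starting from a prior $\mathrm{Beta}(\alpha, \beta)$ on a bandit's success rate $\theta$ and observing $n$ trials $x_1, \dots, x_n \in \{0, 1\}$ with $s = \sum_{i=1}^{n} x_i$ successes:

$$p(\theta \mid x_{1:n}) \propto \theta^{\alpha-1}(1-\theta)^{\beta-1}\,\theta^{s}(1-\theta)^{n-s} \quad\Longrightarrow\quad \theta \mid x_{1:n} \sim \mathrm{Beta}(\alpha + s,\; \beta + n - s)$$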
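A common way to turn these Beta posteriors into a decision rule is Thompson sampling: draw one sample from each bandit's posterior, pull the bandit with the largest draw, then add the observed reward to that bandit's $\alpha$ (success) or $\beta$ (failure). The post's own code is not reproduced here, so the following is a minimal sketch that assumes Thompson sampling, a uniform $\mathrm{Beta}(1, 1)$ prior, and hypothetical success rates. The function name `thompson_sampling` is illustrative.

```python
import numpy as np


def thompson_sampling(success_rates, n_trials, alpha0=1.0, beta0=1.0, seed=0):
    """Thompson sampling for Bernoulli bandits with Beta(alpha0, beta0) priors.

    `success_rates` are the hidden true rates, used only to simulate rewards.
    Returns the posterior parameters and the number of pulls per arm.
    """
    rng = np.random.default_rng(seed)
    p = np.asarray(success_rates, dtype=float)
    k = len(p)
    alpha = np.full(k, alpha0)  # prior pseudo-count of successes, per arm
    beta = np.full(k, beta0)    # prior pseudo-count of failures, per arm
    pulls = np.zeros(k, dtype=int)

    for _ in range(n_trials):
        # Sample a plausible success rate for every arm from its posterior;
        # uncertain arms give spread-out samples, which drives exploration.
        theta = rng.beta(alpha, beta)
        arm = int(np.argmax(theta))

        # Simulate one Bernoulli trial on the chosen arm.
        reward = int(rng.random() < p[arm])

        # Conjugate update for one trial: Beta(a, b) -> Beta(a + x, b + 1 - x).
        alpha[arm] += reward
        beta[arm] += 1 - reward
        pulls[arm] += 1

    return alpha, beta, pulls


alpha, beta, pulls = thompson_sampling([0.2, 0.5, 0.75], n_trials=2000)
print("pulls per arm:", pulls)
print("posterior means:", alpha / (alpha + beta))
```

Each bandit is chosen with the posterior probability that it is the best one, so exploration fades on its own as the posteriors sharpen; no explicit exploration rate has to be tuned.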

Code & Results

The results are shown below:

As you can see, the algorithm spends only a few trials on the sub-optimal bandits, and the majority of the trials are performed on the optimal bandit.
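One way to see why so few trials go to the sub-optimal bandits: under Thompson sampling (assumed here), a bandit is chosen with the posterior probability that it has the highest success rate. The sketch below estimates that probability by Monte Carlo for two hypothetical posteriors. The counts are illustrative and are not results from this post.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 100_000

# Hypothetical posteriors after some play, starting from a Beta(1, 1) prior:
# a weak arm with 3 successes in 20 pulls, a strong arm with 750 in 1000.
weak = rng.beta(1 + 3, 1 + 17, size=n_samples)
strong = rng.beta(1 + 750, 1 + 250, size=n_samples)

# Fraction of joint draws in which the weak arm looks better: this approximates
# how often Thompson sampling would still choose it on the next trial.
p_weak_chosen = np.mean(weak > strong)
print(f"P(weak arm chosen) ~ {p_weak_chosen:.6f}")
```

With these illustrative numbers the weak arm is almost never chosen again, even though its own posterior is still wide; that is what keeps the number of wasted trials small.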

The full code for this project is available in its GitHub repository.